Dr Aruna Dayanatha PhD

The Natural Arrival of New Agents

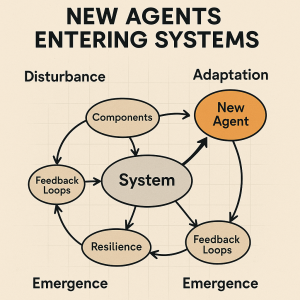

In systems theory, an agent is any entity that can act, interact, and adapt within a system. Traditionally, the recognized agents have been humans, along with organizations, institutions, and natural actors. Today, with the rise of artificial intelligence, AI systems are joining them as agents. From a pure systems perspective, this is not an anomaly or a disruption; it is a natural phenomenon. Systems are not static: they evolve by absorbing new agents, which can extend, moderate, or sometimes disrupt existing capabilities.

Human Agents and Their Reactions

When a new agent enters a system, human agents typically respond with mixed reactions:

- Resistance: Fear of being displaced or losing value within the system.

- Adaptation: Adjusting roles to collaborate with or oversee the new agent.

- Redefinition: Reinterpreting one’s identity and contribution in light of new systemic dynamics.

These reactions are understandable, but they reflect a human-centered bias. The system itself does not distinguish between human and AI agents; it registers only functional roles and interactions.

Systems Theories That Help Explain the Phenomenon

Several contemporary systems theories provide a deeper understanding of this dynamic:

- Complex Adaptive Systems (CAS): In CAS, systems are made up of multiple interacting agents whose behavior leads to emergent outcomes. The arrival of a new agent, such as AI, creates new interaction patterns that can destabilize the system at first but eventually produce new forms of order. For example, when AI entered e-commerce platforms, it initially disrupted traditional retail models but eventually gave rise to new ecosystems built around personalized recommendation engines and automated logistics.

- Sociotechnical Systems Theory: This theory emphasizes the joint optimization of human and technological subsystems. From this view, the introduction of AI should not be seen as a replacement for humans but as part of a reconfiguration that aligns technical and social elements to achieve better outcomes. A practical case is seen in manufacturing, where cobots (collaborative robots) work alongside human workers, increasing efficiency without removing human oversight.

- Soft Systems Methodology (SSM): SSM highlights that humans are carriers of worldviews. When AI enters as a new agent, different stakeholders may interpret its role differently: some see efficiency gains, others see threats. For instance, in the banking sector, executives may see AI chatbots as a cost-saving measure, while front-line staff may fear for their job security. Leaders must navigate these worldviews, using participatory approaches to design coherent system responses.

- Viable System Model (VSM): VSM holds that for a system to remain viable, it must balance five functions: operations, coordination, control, intelligence, and policy. Introducing AI as a new agent can strengthen the intelligence and operational functions, but it also demands careful coordination. Logistics networks are a good example: AI-driven route optimization supports operations, yet central policy and governance are still needed to ensure fairness and compliance.

- Socio-Ecological Systems (SES) Framework: This framework stresses adaptability and governance in systems where multiple agents interact. Applied to organizations, it implies that AI agents must be governed with clear rules and incentives so that their actions contribute to sustainability and resilience. In healthcare, for instance, AI diagnostic tools add capability but need strong governance to ensure transparency, fairness, and trust.

How System Owners Should Interpret It

Owners, leaders, or stewards of systems must learn to interpret the entry of new agents without human-centered bias:

- Capability Lens: Ask, “What new capabilities does this agent mediate or moderate within the system?” rather than “Is this agent replacing someone?”

- Adaptation Dynamics: Expect an initial period of disequilibrium, followed by rebalancing and, potentially, the emergence of new capabilities.

- Risk–Resilience Balance: Recognize that new agents may both increase resilience (through diversity and redundancy) and introduce fragility (through complexity or dependence).

- Orchestration Role: Owners must act as orchestrators, ensuring all agents—human and non-human—are aligned toward the system’s purpose.

Conclusion

The entry of AI agents into human systems is neither a threat nor a miracle; it is a systemic event. Just as ecosystems adapt to new species, social and organizational systems adapt to new classes of agents. Contemporary systems theories and real-world examples remind us that the central challenge lies not in resisting the phenomenon but in orchestrating it, so that humans and AI together extend the frontier of system capability.