Dr. Aruna Dayanatha

In boardrooms and C-suites, leaders face high-stakes choices daily. With more data and perspectives to weigh than ever, these decisions have grown increasingly complex. Artificial intelligence (AI) can sift through oceans of information and offer insights in seconds, but it cannot match the wisdom, ethics, and contextual understanding of human executives. The real opportunity lies in combining the two. By integrating AI-generated meta-opinions (high-level insights synthesized by AI) with human judgment, organizations can make stronger, more strategic decisions.

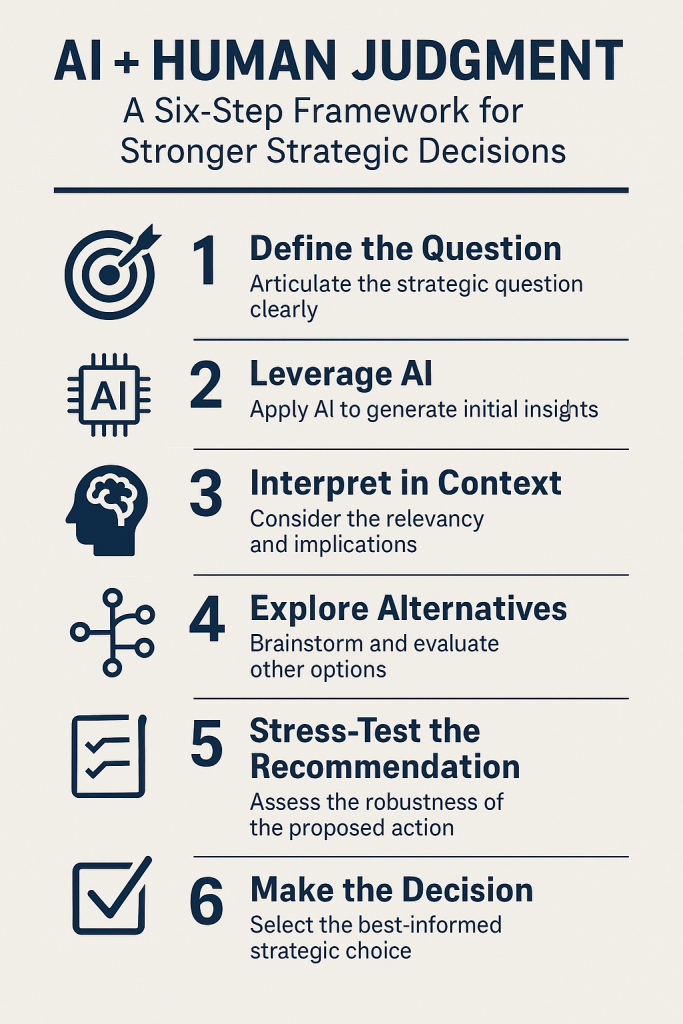

This article outlines a six-step AI-Informed Opinion Building Framework for putting this idea into action. It also explores the difference between human and AI opinions, the unique value each contributes, an illustrative example of the framework in use, and the benefits and risks of blending AI insights with human decision-making.

Human vs. AI Opinions: Different Strengths, Better Together

AI and people approach decisions from different angles. AI excels at processing vast data and spotting patterns, providing rapid, objective insights. But AI lacks the nuanced understanding and ethical framework that humans bring. Humans, by contrast, draw on experience, intuition, and contextual awareness to judge ambiguous situations, yet we are prone to biases and limited in how much information we can handle. The two approaches naturally complement each other: AI’s analytic breadth and consistency combined with human depth and judgment create a stronger whole.

The Unique Value AI Brings

- Breadth and Speed: AI can scan enormous ranges of information – reports, customer data, market trends – in seconds. It provides a panoramic fact base that no human could compile in the same time.

- Analytical Depth: AI models can dive deep into patterns and evaluate far more scenarios or strategic alternatives than a human team could work through by hand. This data-driven rigor expands the solution set and can surface insights that would otherwise stay hidden (for example, by forecasting the likely outcomes of a decision).

- Synthesis of Insights: AI can summarize and synthesize diverse inputs into a coherent recommendation. This “meta-opinion” – essentially an AI-generated high-level perspective – condenses knowledge from many sources, giving leaders a balanced starting point informed by evidence.

In short, AI offers scale and analytical muscle that complement human intuition. It gives leaders a richer evidence base and more options to consider, which is one reason why over 40% of CEOs say they now use generative AI to inform decisions, citing benefits such as better compliance and less biased, more inclusive choices.

The Unique Role of Human Judgment

- Contextual Insight: Humans see the big picture. We understand context, history, and nuance behind the numbers. A statistic or trend from AI can mean different things depending on context – and human leaders are adept at interpreting those subtleties in light of culture, customer relationships, and situational awareness.

- Ethics and Values: People serve as the moral compass in decisions. We weigh ethical considerations, values, and stakeholder impact in ways an AI (which lacks true empathy or morality) cannot. Humans ensure that choices align with principles and understand the human consequences behind the data.

- Experience and Intuition: Seasoned leaders draw on hard-won experience and “gut feeling.” This intuition is pattern recognition shaped by years of learning what works and what doesn’t. It helps spot issues an algorithm might miss and fuels creativity – imagining options beyond what the data suggests.

- Communication and Buy-In: A great decision isn’t just analytically sound; it must be communicated and executed. Human leaders excel at communicating the ‘why’ of a decision, inspiring teams, and building trust. They can persuade stakeholders, rally employees around a vision, and adjust the message with emotional intelligence – tasks well beyond AI’s reach.

In essence, human judgment is the strategic and moral compass. It ensures decisions are not just data-driven, but also contextually appropriate and aligned with core values. Humans provide the “why” and the heart behind the numbers.

AI-Informed Opinion Building Framework: Six Steps to Better Decisions

Combining AI and human insights works best when approached with a structured process. The following six-step framework guides leaders in leveraging AI’s strengths at each stage of decision-making, while keeping human judgment in charge.

1. Define the Question

Every great decision starts with a clear definition of the question or problem. Be specific about what you’re deciding and the criteria for success – this will focus both the AI analysis and the human evaluation. For example, instead of a vague goal like “improve customer experience,” define it as, “How can we improve our customer satisfaction score by 20% within one year?” A well-framed question sets the stage for focused insights.

2. Gather AI Meta-Opinions

With a well-defined question, let AI do what it does best – gather and synthesize information into an initial perspective. Use AI tools to collect relevant data (surveys, market research, performance metrics) and summarize key insights. The AI can even draft recommendations or outline options based on patterns it finds. The goal is to obtain an evidence-based “meta-opinion” – a broad, data-driven view of the issue that would be impossible to compile as quickly manually.

3. Apply Human Subjectivity

Now, bring human judgment into play to scrutinize and enrich the AI’s output. Critically review the AI’s findings: Do they make sense in context? What important nuances or human factors might be missing? At this stage, you apply domain expertise, intuition, and ethics to filter or adjust the AI’s suggestions. This ensures data-driven insights are interpreted correctly and aligned with your organization’s realities and values.

4. Integrate and Weigh

Next, integrate the AI insights with human insights to form a complete picture. Discuss the AI’s recommendations alongside human perspectives. Weigh the evidence: which data points are most compelling, and where does intuition suggest caution or a different tack? In this collaborative step, the team converges on a tentative direction or preferred option, blending the best of analytical evidence and human wisdom.
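One way to make this weighing step concrete is a simple blended score. The sketch below is only an illustration, not a method prescribed by the framework: the `Option` class, the 0-to-1 scores, the `ai_weight` parameter, and the disagreement threshold are all hypothetical. It ranks options by a weighted average of an AI-derived score and a human-assigned score, and flags options where the two diverge sharply so the team knows where to focus its discussion.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """One candidate decision (hypothetical structure for illustration)."""
    name: str
    ai_score: float     # assumed 0-1 rating derived from the AI meta-opinion
    human_score: float  # assumed 0-1 rating from the leadership review


def blended_score(option: Option, ai_weight: float = 0.5) -> float:
    """Weighted average of the AI and human scores for one option."""
    if not 0.0 <= ai_weight <= 1.0:
        raise ValueError("ai_weight must be between 0 and 1")
    return ai_weight * option.ai_score + (1.0 - ai_weight) * option.human_score


def rank_options(options: list[Option], ai_weight: float = 0.5) -> list[Option]:
    """Return the options sorted from highest to lowest blended score."""
    return sorted(options, key=lambda o: blended_score(o, ai_weight), reverse=True)


def flag_disagreements(options: list[Option], threshold: float = 0.3) -> list[str]:
    """Names of options where AI and human scores differ by at least `threshold`."""
    return [o.name for o in options if abs(o.ai_score - o.human_score) >= threshold]


if __name__ == "__main__":
    # Illustrative numbers only; they do not come from any real analysis.
    candidates = [
        Option("Option A", ai_score=0.8, human_score=0.4),
        Option("Option B", ai_score=0.6, human_score=0.7),
    ]
    for option in rank_options(candidates):
        print(option.name, round(blended_score(option), 2))
    print("Discuss further:", flag_disagreements(candidates))
```

The score is a conversation aid rather than the decision itself. A flagged disagreement is a prompt to ask why the data and the team's intuition point in different directions.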

5. Form the Final Opinion

With analysis and debate done, commit to a decision and articulate it clearly. Choose the option that emerged strongest and formulate the final opinion or strategy. Document the reasoning – how the data and human considerations led to this conclusion. Crucially, a human decision-maker (or team) takes ownership of the call, ready to communicate the “why” to the organization and stakeholders.

6. Stress-Test and Refine

Finally, test the decision and refine it as needed. Before fully rolling out the decision, run pilots or scenario simulations (AI can help simulate outcomes) and gather feedback. See if results align with expectations; if not, adjust the plan. This stress-test step lets you catch flaws or unforeseen issues and improve the decision, so when it’s implemented, it’s far more likely to succeed.
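The scenario simulations mentioned above can be as simple as a Monte Carlo estimate. The following is a minimal sketch under stated assumptions, not a definitive model: it assumes the decision shifts a single metric (such as a satisfaction score) by a normally distributed amount, and the function name and every parameter are hypothetical. It estimates how often the outcome would reach the target across many simulated runs.

```python
import random


def probability_of_meeting_target(
    baseline: float,
    target: float,
    effect_mean: float,
    effect_sd: float,
    trials: int = 10_000,
    seed: int = 0,
) -> float:
    """Estimate the share of simulated runs in which the metric reaches the target.

    Assumes (for illustration only) that the decision changes the metric by a
    normally distributed amount with the given mean and standard deviation.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    rng = random.Random(seed)  # fixed seed so repeated runs are comparable
    hits = 0
    for _ in range(trials):
        outcome = baseline + rng.gauss(effect_mean, effect_sd)
        if outcome >= target:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    # Illustrative inputs only: a score of 70, a target of 74, and an
    # assumed average uplift of 5 points with substantial uncertainty.
    estimate = probability_of_meeting_target(70.0, 74.0, effect_mean=5.0, effect_sd=3.0)
    print(f"Estimated chance of reaching the target: {estimate:.0%}")
```

A low estimate does not rule the decision out, but it does signal that the plan deserves a pilot or a revised target before a full rollout. The effect size and its uncertainty are inputs the team must justify, ideally with pilot data.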

Practical Use Case: Hybrid Work Policy

Imagine a company crafting a hybrid work policy. Its leaders start by clearly defining the question: how to balance remote flexibility with in-office collaboration to maintain productivity and culture. They then have an AI tool analyze employee surveys and performance data, plus external research. The analysis reveals that employees are productive at home but crave face-to-face interaction for creative work. The leadership team reviews these AI insights alongside its own observations, for instance that new hires learn best on-site. Combining the inputs, they decide on a policy of three days in the office and two days remote as the best balance.

The leaders finalize and announce this policy (explaining the data and reasoning behind it), and pilot it in one department. They closely monitor outcomes (with AI dashboards tracking productivity and engagement) and gather feedback, then tweak the policy (e.g. designating common in-office days for all teams) before rolling it out company-wide.

Benefits of Fusing AI and Human Judgment

- More Informed Decisions: Combining AI and human insight means decisions are based on a broader fact base. Leaders can consider far more data and perspectives than they could alone, reducing blind spots and making choices more evidence-based and strategic.

- Reduced Bias: Each side checks the other. Data-driven analysis can challenge human biases and gut assumptions, while human values provide an ethical check on AI’s suggestions. This balance leads to fairer, more objective outcomes than either would reach alone.

- Speed and Efficiency: AI can analyze options and gather insights in moments, dramatically speeding up decision cycles. Leaders and teams then spend more time on high-level thinking and discussion, rather than sifting through information. Faster decisions can be made without sacrificing thoroughness.

- Stronger Buy-In: When people see that a decision is backed by solid data and seasoned judgment, it builds confidence. It’s easier to explain and justify decisions to stakeholders (board members, employees, customers) when you can say, “We looked at the data and considered the human factors.” This transparency and rigor make execution smoother, as stakeholders trust the process.

Risks and Challenges of AI-Human Opinion Fusion

- Overreliance on AI: Leaning too heavily on AI can erode human judgment. If people accept AI outputs blindly, they may become complacent and lose critical thinking skills. It’s vital to keep humans in control of big decisions – AI should inform, not replace, human judgment.

- Lack of Trust in AI: On the other hand, some may dismiss AI advice even when it’s valid – a phenomenon known as algorithm aversion. Team members might favor “their gut” over any computer output. Overcoming this requires transparency (explaining how AI arrived at suggestions) and building a track record of small wins so people see AI as a reliable aid, not a black box.

- Data Quality and Bias: AI recommendations are only as good as the data behind them. Bad or biased data can lead to bad advice. For example, if the training data has gaps or reflects past prejudices, the AI might unknowingly perpetuate those. Humans must vigilantly check AI outputs for signs of bias or error and ensure diverse, high-quality data feeds the AI.

- Ethical and Privacy Concerns: AI might propose actions that are efficient but clash with ethics or regulations. It could, say, suggest a cost-cutting measure that harms employee wellbeing, or analyze employee data in ways that raise privacy issues. Human leaders need to vet AI-driven ideas for compliance with laws and alignment with the company’s values, and set boundaries on what AI should and shouldn’t be used for.

- Integration Challenges: Blending AI into decision processes demands new skills and coordination. Teams must learn how to interpret AI insights and resolve disagreements (e.g. when the AI’s suggestion conflicts with a manager’s opinion). There is also a learning curve and cultural change aspect – organizations may need to train staff, update workflows, and foster an open mindset. If not managed well, the collaboration can falter; studies have found human-AI teams sometimes underperform if communication and trust are lacking.

Conclusion

In summary, the fusion of AI analysis and human judgment can elevate decision-making to a new level. In an era of information overload and rapid change, those leaders who skillfully integrate AI’s breadth of insight with human wisdom will gain a strategic edge. The six-step framework outlined above offers a practical guide to achieve this balance in a responsible way, ensuring that AI is used not as a crutch or a black box, but as a powerful tool in the hands of thoughtful decision-makers.

This approach is ultimately about collaboration – between human minds and machine intelligence. It’s not about ceding control to algorithms, nor about ignoring the value of data; it’s about creating a collaborative intelligence where each complements the other’s strengths. The organizations that master this synergy will be the ones to thrive in the coming years. By developing processes that blend computational power with human context and values, executives can lead with decisions that are not only faster and more informed, but also wiser and more principled. The future of strategic leadership belongs to those prepared to leverage the best of both artificial and human intelligence.