By Dr Aruna Dayanatha PhD

In the past year, large language models (LLMs) like ChatGPT, Claude, and Gemini have become indispensable tools for professionals. They draft reports, summarize research, and brainstorm ideas at lightning speed. Yet, most users are barely scratching the surface of what’s possible.

Too often, LLMs are treated as “super search engines” or disposable Q&A tools. You type a question, get an answer, and move on. The problem? This transactional approach leaves immense value on the table.

What if, instead of treating your LLM as a tool, you could turn it into a thinking partner—one that knows your style, challenges your assumptions, tracks your blind spots, and consistently sharpens your decision-making?

That’s exactly what this article is about. Using the principles of Approach Engineering—designing structured, repeatable ways to achieve success—we’ll show you how to build a deliberate system that lets you get more out of your LLM than you ever thought possible.

1. Understand What LLMs Can and Can’t Do

First, it’s crucial to clear up a common misconception: LLMs don’t “learn you” the way a human colleague would.

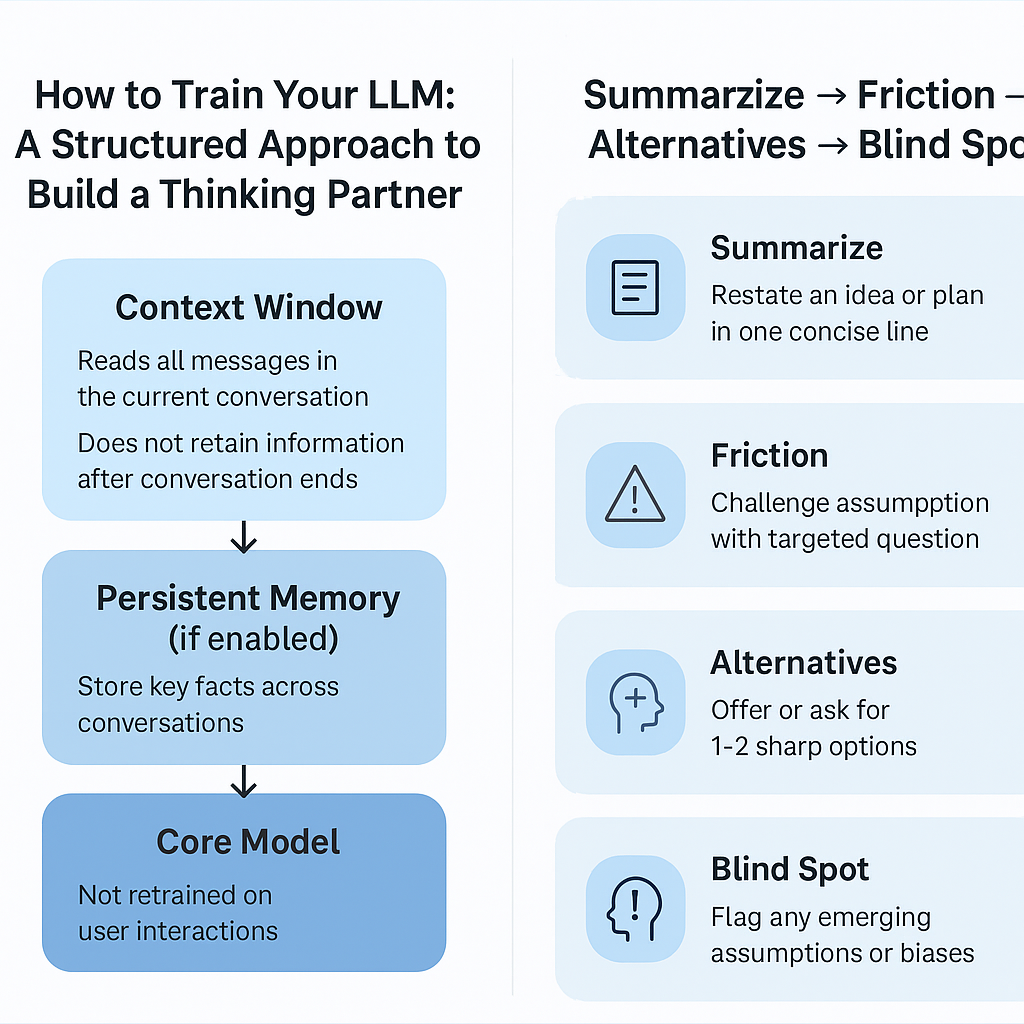

Here’s how their memory works:

- Context Window (Short-Term Memory):

  - In any single conversation, the LLM can see all the messages (up to a certain token limit).

  - This allows it to reference earlier points and stay coherent.

  - Limitation: Once the conversation ends or the context window overflows, the LLM no longer has access to the earlier content.

- Persistent Memory (If Enabled):

  - Some platforms (like ChatGPT with memory) can store key facts about you across conversations, e.g., your role, preferences, or areas of focus.

  - These facts are stored by the platform outside the model, not in the model itself.

  - You can view, edit, or delete them.

- Core Model:

  - The model’s underlying parameters are not updated when you interact with it. Your conversations do not “retrain” it.

Key takeaway: You need to design a process that works within these limitations rather than assuming the LLM will “just know you” over time.
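To make the context window limit concrete, here is a minimal sketch of how older messages can fall out of view once a token budget is exceeded. The word-count token estimate and the function names `estimate_tokens` and `visible_messages` are assumptions made for illustration. Real platforms use their own tokenizers and may handle overflow differently.

```python
def estimate_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word. Real tokenizers differ.
    return len(text.split())


def visible_messages(messages: list[str], max_tokens: int) -> list[str]:
    """Return the most recent messages that fit within the token budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older falls out of view
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "My role is head of training.",
    "We plan to expand into three countries.",
    "What risks should I consider?",
]

# With a budget of 12 estimated tokens, the oldest message no longer fits.
visible = visible_messages(history, max_tokens=12)
print(visible)
```

In this toy run the first message, the one stating your role, is the one that drops out. That is why key facts are worth restating or storing in persistent memory rather than trusting the conversation to carry them.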

2. Build a Structured System Around the LLM

To turn an LLM into a true thinking partner, you need a deliberate structure. Here’s the system that works:

Step 1: Summarize → Friction Point → Alternatives → Blind Spot

Every time you bring an idea to the LLM, ask it to follow this four-step flow:

- Summarize Your Position:

The LLM restates your idea or plan in one concise line. This confirms mutual understanding.

- Friction Point:

The LLM challenges your assumption with a targeted question or scenario. Example:

“What if your plan to expand AI training into three countries at once dilutes your credibility in each?”

- Alternative(s):

The LLM offers one or two sharp options to stretch your thinking. Example:

“Focus on dominating one market first, then replicate with a proven model.”

- Blind Spot Alert:

If the LLM detects a potential bias or recurring assumption, it flags it immediately. Example:

“You tend to equate speed with market dominance; this could undermine quality.”
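One way to make the four-step flow repeatable is to generate the request from a small template instead of retyping it. The sketch below is only an illustration: the names `FLOW_STEPS` and `build_flow_prompt`, the exact wording of each instruction, and the "write 'None'" fallback are assumptions, not a prescribed format.

```python
# Each step is a (label, instruction) pair. The wording is illustrative.
FLOW_STEPS = [
    ("Summary", "Restate my idea or plan in one concise line."),
    ("Friction Point", "Challenge one assumption with a targeted question or scenario."),
    ("Alternatives", "Offer one or two sharp options that stretch my thinking."),
    ("Blind Spot Alert", "Flag any bias or recurring assumption you detect, or write 'None'."),
]


def build_flow_prompt(idea: str) -> str:
    """Build a prompt that asks for the four labeled sections in a fixed order."""
    lines = ["Respond to the idea below using exactly these labeled sections, in order:"]
    for number, (label, instruction) in enumerate(FLOW_STEPS, start=1):
        lines.append(f"{number}. {label}: {instruction}")
    lines.append("")  # blank line between the instructions and the idea
    lines.append(f"Idea: {idea}")
    return "\n".join(lines)


prompt = build_flow_prompt("Expand AI training into three countries at once.")
print(prompt)
```

Paste the resulting text into any chat interface. Keeping the labels fixed makes responses easier to compare across ideas.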

Step 2: Mid-Point Updates

Instruct the LLM to issue a quick mid-point update if a critical blind spot emerges early, even before a full summary is due.

Example:

Mid-Point Blind Spot Update: “I’ve noticed you often assume stakeholders will adopt AI without resistance. This may be shaping several decisions.”

Step 3: Periodic Summaries

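Ask the LLM to periodically consolidate all blind spots and friction points into a single report (weekly or monthly, depending on how often you interact). Because the model can only draw on what is in the current conversation or in saved memory, keep your own running log of flagged items and paste it in when you request the report.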

Each entry should include:

- The blind spot itself.

- Suggested actions to close the gap.

Example summary entry:

Blind Spot: Assuming SMEs will invest in long-term AI programs without proof of ROI.

Suggested Action: Pilot small, quick-win initiatives first to build trust and appetite for larger programs.
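If you keep your own log of flagged blind spots, consolidating it into a report can be sketched in a few lines. The `BlindSpot` record, the `build_report` function, and the recurrence count are assumptions added for illustration. The second log entry's suggested action is a hypothetical example, not taken from a real session.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class BlindSpot:
    description: str
    suggested_action: str


def build_report(log: list[BlindSpot]) -> str:
    """Group repeated blind spots and list the most frequent ones first."""
    counts = Counter(entry.description for entry in log)
    actions: dict[str, str] = {}
    for entry in log:
        actions[entry.description] = entry.suggested_action  # latest suggestion wins
    sections = []
    for description, times in counts.most_common():
        sections.append(
            f"Blind Spot: {description} (flagged {times}x)\n"
            f"Suggested Action: {actions[description]}"
        )
    return "\n\n".join(sections)


sme = BlindSpot(
    "Assuming SMEs will invest in long-term AI programs without proof of ROI.",
    "Pilot small, quick-win initiatives first to build trust and appetite for larger programs.",
)
adoption = BlindSpot(
    "Assuming stakeholders will adopt AI without resistance.",
    "Interview two skeptical stakeholders before committing.",  # hypothetical action
)

report = build_report([adoption, sme, sme])
print(report)
```

Counting how often each item was flagged is one simple way to separate a recurring pattern from a one-off remark.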

Step 4: Define Your Instructions Clearly

The system works only if the LLM knows its role. Give it explicit instructions at the start:

- “Challenge my assumptions occasionally.”

- “Track blind spots and summarize them.”

- “Offer concise alternatives, not long-winded lists.”

This turns the LLM from a passive responder into an active partner.
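If you work with an LLM through code rather than a chat window, these instructions usually go into a system message at the start of the conversation. The sketch below assumes the common list-of-messages shape with `role` and `content` keys. Some platforms take the system prompt as a separate field instead, so check your provider's documentation. The function name `start_conversation` and the opening sentence of the system text are illustrative.

```python
ROLE_INSTRUCTIONS = [
    "Challenge my assumptions occasionally.",
    "Track blind spots and summarize them.",
    "Offer concise alternatives, not long-winded lists.",
]


def start_conversation(first_message: str) -> list[dict[str, str]]:
    """Return a message list with the role instructions placed first."""
    system_text = "You are my thinking partner. Follow these rules:\n" + "\n".join(
        f"- {rule}" for rule in ROLE_INSTRUCTIONS
    )
    return [
        {"role": "system", "content": system_text},  # assumed message format
        {"role": "user", "content": first_message},
    ]


messages = start_conversation("I want to expand AI training into three countries at once.")
for message in messages:
    print(message["role"], "->", message["content"])
```

In a chat interface, the same text can simply be pasted as the first message or saved as custom instructions where the platform offers them.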

Step 5: Practice Memory Hygiene

If your LLM platform has persistent memory, periodically update the saved facts. Outdated or inaccurate memories can skew outputs.
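A simple way to make memory hygiene routine is to keep a private checklist of what you have asked the platform to remember and when you last reviewed each item. The sketch below is a local helper only; it does not talk to any platform. The 90-day threshold, the function name `stale_facts`, and the sample dates are assumptions for illustration.

```python
from datetime import date, timedelta


def stale_facts(saved_facts: dict[str, date], today: date, max_age_days: int = 90) -> list[str]:
    """Return facts whose last review is older than the allowed age, sorted by name."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(fact for fact, reviewed in saved_facts.items() if reviewed < cutoff)


# Hypothetical checklist: fact text mapped to the date it was last reviewed.
checklist = {
    "Role: head of training": date(2025, 5, 10),
    "Priority: expand into three countries": date(2024, 11, 15),
    "Style: concise answers": date(2025, 1, 20),
}

due = stale_facts(checklist, today=date(2025, 6, 1))
print(due)
```

Anything the helper returns is a prompt to open the platform's memory settings and confirm, edit, or delete that fact.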

3. Why This Is Unique (Approach Engineering)

This system is not random prompting. It’s an engineered approach that aligns with the principles of Approach Engineering:

- Clarity of Roles:

The LLM knows exactly how to operate (summarize, challenge, track).

- Feedback Loops:

Blind spot alerts and mid-point updates create continuous improvement.

- Pattern Recognition:

Periodic summaries allow you to spot recurring thinking patterns and correct them.

- Predictable Outputs:

Instead of getting random answers, you now get structured insights every time.

4. How Anyone Can Implement This Today

Here’s how you can start:

- Decide the Role:

Do you want the LLM to be a challenger, strategist, coach, or all three?

- Define the Flow:

Summarize → Friction Point → Alternative → Blind Spot.

- Set the Rules:

- When should it challenge you?

- How often should it summarize blind spots?

- Leverage Memory (if available):

Store your key facts (role, priorities, style) so the LLM doesn’t start from scratch every time.

- Review Blind Spots:

Take periodic summaries seriously. They will reveal patterns in your decision-making that you might not see yourself.

5. The Payoff

Once you implement this system, you’ll notice a shift:

- You’ll make sharper decisions because your assumptions are constantly tested.

- You’ll see recurring blind spots that previously went unnoticed.

- You’ll turn your LLM from a reactive tool into a proactive partner that makes you better at your craft.

This is the essence of Approach Engineering: designing structured, repeatable processes that make better outcomes far more likely.

6. Final Thought

LLMs are not magic. They don’t “know you” automatically, and they won’t challenge you unless you design for it. But with a structured system like this, anyone can transform their interactions with AI into a powerful feedback loop.

Instead of asking, “How can I get the most out of this tool?” you’ll find yourself asking, “How can this partner make me the best version of myself?”

And that’s a question worth pursuing.